You know what’s worse than a silent bug?

Getting hit with a $300 bill because you accidentally “vibed” too hard on an AI coding tool and didn’t know how tokens work.

If you’re using pay-per-use AI services like ROO, or spinning up Gemini sessions with your own API key, or testing random GPT APIs, you better listen up.

Because you are paying by the token.

And I guarantee you, you do not understand how much you are actually sending if you think you are “just asking a quick question.”

Today, we are fixing that.

What the Hell is a Token Anyway?

You are not charged by the request.

You are not charged by the chat session.

You are charged by tokens.

A token is a chunk of text.

Sometimes it is a whole word, sometimes just part of one.

For example:

- “ChatGPT is great!” → might break into [“Chat”, “G”, “PT”, “ is”, “ great”, “!”] (the exact split depends on the model’s tokenizer)

- That is six tokens, not three words.

In English, a good rule of thumb is that one token is about ¾ of a word.

But if you are pasting in code or technical text, you usually get even more tokens per word.

Variable names, symbols, and indentation all become tokens.

So when you type a “small” request, you might be sending way more tokens than you think.
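To put the ¾-of-a-word rule of thumb into numbers, here is a rough estimator in Python. It is a heuristic only, and the function name is made up for this post. Real billing uses the provider’s own tokenizer (OpenAI publishes one as the open-source `tiktoken` library), and code usually splits into more tokens than this predicts.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate from the ~3/4-word-per-token rule of thumb.

    Hypothetical helper: real counts come from the provider's tokenizer
    and are usually higher for code, symbols, and indentation.
    """
    words = len(text.split())
    # tokens ~= words / 0.75 = words * 4 / 3, rounded up with integer math
    return (words * 4 + 2) // 3


print(estimate_tokens("ChatGPT is great!"))  # 4
```

The tokenizer example above split that same sentence into six tokens, so treat the estimate as a ballpark that often runs low.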

What Actually Gets Sent to the AI

This is where it really punches you in the face.

When you ask an LLM (Large Language Model) a question, it does not see only your latest question.

It sees the entire context.

Context = every message in the conversation history.

Inputs + Outputs.

All the code it just wrote.

All the chat you had before.

It is like you are handing the model a giant stack of papers saying “Hey, read all this again before you answer my next question.”
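Here is a minimal Python sketch of what a chat tool does behind the scenes, assuming a typical chat-style API that takes a list of role/content messages. `fake_model` is a hypothetical stand-in for the real API call. The point is that the whole `history` list goes out on every turn.

```python
history: list[dict[str, str]] = []


def fake_model(messages: list[dict[str, str]]) -> str:
    # Hypothetical stand-in for a real API call; a real model would
    # read (and bill you for) every message in `messages`.
    return f"(answer after reading {len(messages)} message(s))"


def ask(question: str) -> str:
    history.append({"role": "user", "content": question})
    # The entire conversation so far is sent, not just the new question.
    reply = fake_model(history)
    history.append({"role": "assistant", "content": reply})
    return reply


print(ask("Write me 200 lines of backend code."))
print(ask("Why is my code throwing a null pointer exception?"))
```

By the second question the model is already rereading three messages, including the entire code dump from the first answer.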

Example:

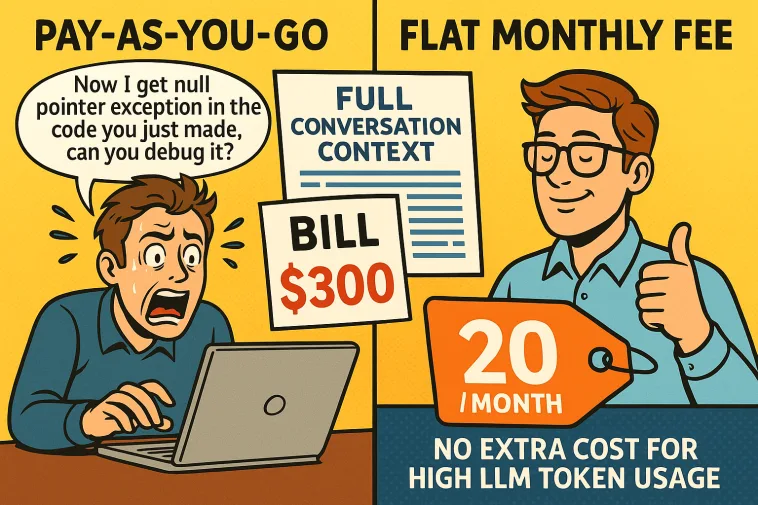

Let’s say you are coding and you do:

- Ask AI to generate 200 lines of backend code.

- Ask “Hey, why is my code throwing a null pointer exception?”

What actually happens under the hood:

- Your original question = 10 tokens

- The generated code = 5,000 tokens

- Your new “debug” request = 15 tokens

Total tokens being sent = 5,025 tokens

And guess what?

You pay for all of it.

Not just the tiny debug question you typed.

The bigger the history, the more expensive every tiny follow-up becomes.
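The example above can be replayed in a few lines of Python. The token counts are the illustrative numbers from this post, and the function name is made up. It just sums everything in the history up to each new question.

```python
def input_tokens_per_request(messages: list[tuple[str, int]]) -> list[int]:
    """For each user message, total input tokens sent with that request.

    `messages` is the conversation as (role, token_count) pairs; every
    request carries all earlier messages plus the new user message.
    """
    sizes = []
    running_total = 0
    for role, tokens in messages:
        running_total += tokens
        if role == "user":
            sizes.append(running_total)
    return sizes


conversation = [
    ("user", 10),         # "generate 200 lines of backend code"
    ("assistant", 5000),  # the generated code
    ("user", 15),         # "why is my code throwing a null pointer exception?"
]
print(input_tokens_per_request(conversation))  # [10, 5025]
```

Add one more follow-up and those 5,025 tokens, plus the model’s reply to the debug question, ride along again.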

The Pay-Per-Use Trap

Pay-per-use services bill input and output tokens separately, at rates something like:

- $1.25 per million input tokens

- $5.00 per million output tokens

It sounds cheap when you hear “million,” but remember what we just said.

If your context is around 5,000 tokens for each question, you are eating through:

- 5,000 input tokens

- 5,000 output tokens (if the AI answers in kind)

That is 10,000 tokens per exchange.

At those rates, the 5,000 input tokens cost about $0.006 and the 5,000 output tokens cost $0.025, so each exchange runs roughly three cents.
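To check the math, price input and output tokens separately, since they are billed at different rates. This is a small Python sketch; the function name is made up, and the default prices are just the example rates quoted above, not any provider’s current price list.

```python
def exchange_cost(input_tokens: int, output_tokens: int,
                  input_price_per_m: float = 1.25,
                  output_price_per_m: float = 5.00) -> float:
    """Dollar cost of one request, with input and output billed separately.

    Default prices are the example rates from this post (dollars per
    million tokens), not a real provider's price list.
    """
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


per_exchange = exchange_cost(5_000, 5_000)
print(f"${per_exchange:.5f} per exchange")  # $0.03125 per exchange
print(f"${per_exchange * 300:.2f} for 300 exchanges")
```

Output tokens cost four times as much as input tokens at these rates, so long answers hurt more than long questions.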

Still sounds cheap, right?

Now do that 300 times while coding all day.

Now multiply that by a larger context because you asked it to “remember” even more stuff.

Now add retries when it gets the answer wrong.

Now watch your daily coding session accidentally rack up $300+ without you noticing.

And your only reminder is a sad Stripe email at the end of the month.
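The per-exchange math above treats the context as a fixed 5,000 tokens. In a real session it keeps growing, because every answer joins the history. This Python sketch uses the post’s illustrative numbers (15-token questions, 5,000-token answers, the example rates) and deliberately simple assumptions: fixed message sizes, flat pricing, and nothing trimmed, summarized, or cached. Real tools and providers differ, so read it as the shape of the curve, not a bill estimate.

```python
def session_cost(exchanges: int, question_tokens: int, answer_tokens: int,
                 input_price_per_m: float = 1.25,
                 output_price_per_m: float = 5.00) -> float:
    """Total dollars for a chat where every request resends the full history.

    Simplifying assumptions (not any real tool's behavior): every question
    and answer has a fixed size, nothing is trimmed or cached, and prices
    stay flat regardless of context length.
    """
    history = 0
    total_input = 0
    total_output = 0
    for _ in range(exchanges):
        history += question_tokens     # new question joins the history
        total_input += history         # whole history is billed as input
        total_output += answer_tokens  # the reply is billed as output
        history += answer_tokens       # ...and then joins the history too
    return (total_input * input_price_per_m
            + total_output * output_price_per_m) / 1_000_000


print(f"${session_cost(300, 15, 5_000):,.2f}")  # $288.66
```

Same 300 questions, but because each request drags the full history along, the total lands near $290 instead of under $10. The input side grows roughly with the square of the number of exchanges.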

Real Horror Stories

People vibe coding all day in ROO with their own Gemini 1.5 Pro API keys end up stunned when they check their billing console.

$300.

$450.

One guy I read about racked up almost $800 just “building a prototype.”

Not because he was an idiot.

Because nobody explains that every little vibe message is carrying the weight of the entire conversation with it.

You are not asking “just one more thing.”

You are resending a goddamn novel every time you press Enter.

Flat Fee AI: Why It Might Actually Save You

Flat fee models look expensive up front.

$20 a month?

$50 a month?

$100 a month?

But here is the thing:

Once you hit any kind of serious usage, flat fee models stop being expensive.

They start saving your ass.

You know your cost.

You do not get surprised.

You can vibe code all day, blast millions of tokens back and forth, and never pay a cent more than your subscription.

If you are building a real app, coding every day, or doing long context research, flat fee services are your best friend.

They protect you from your own “just one more tweak” addiction.
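A quick way to see where flat fee starts winning is a break-even count: the monthly fee divided by what one pay-per-use exchange costs. This Python sketch uses a made-up helper name and the example exchange from earlier (5,000 tokens in, 5,000 out, at the example rates, which works out to $0.03125). It ignores any usage caps or rate limits a flat-fee plan may have.

```python
import math


def breakeven_exchanges(monthly_fee: float, cost_per_exchange: float) -> int:
    """Exchanges per month at which a flat fee matches pay-per-use spend.

    Hypothetical helper for a back-of-the-envelope comparison; it ignores
    usage caps and rate limits that flat-fee plans may impose.
    """
    return math.ceil(monthly_fee / cost_per_exchange)


# 5,000 input + 5,000 output tokens at $1.25 / $5.00 per million = $0.03125.
for fee in (20, 50, 100):
    print(f"${fee}/month breaks even at {breakeven_exchanges(fee, 0.03125)} exchanges")
```

That prints 640, 1,600, and 3,200 exchanges. At the 300 exchanges a day mentioned earlier, even the $100 tier breaks even in under 11 days.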

Don’t Get Got

- A token is not a request. Tokens are the pieces of every word, symbol, space, and code fragment you send and receive.

- You pay for both input and output tokens, usually at different rates, with output costing more.

- You pay for the full context every time you ask a question.

- Pay-per-use sounds cheap until you stack up millions of tokens without realizing it.

- Flat fee is boring but safe.

If you are serious about building, flat fee wins.

If you are just poking around once a week, pay-per-use might be fine.

But if you are planning to code, create, or chat with an LLM for hours on end, pick your poison carefully.

Otherwise that “cheap” AI session is going to feel like you just bought a MacBook you can’t return.